ABSTRACT

Taiwan's agricultural management policies have long used cadastral maps as the foundational geographic data for farmland statistics. However, cadastral maps lack the flexibility to reflect changes in crop cultivation boundaries. The Department of Information Technology, Ministry of Agriculture of Taiwan, has therefore developed agricultural parcel maps based on aerial imagery showing farmland cultivation patterns. A Bi-Directional Cascade Network (BDCN) edge detection model, integrated into an ArcGIS Pro add-in tool, automates parcel boundary detection. This addresses the long-standing challenges of high labor costs and time pressure when manually updating parcel boundaries using new aerial imagery. Experimental results from 2,408 parcels in Wandan Township, Pingtung County, show a precision of 79.5%, a recall of 92.3%, and an F1 score of 85.4%. For nationwide updates across 2.7 million parcels, an estimated 351,000 parcels (13% of the total) would require manual verification. These results indicate that integrating geographic information systems (GIS) with deep learning enhances the timeliness of agricultural surveys and establishes a technical foundation for farmland management. Future work will employ deep learning to develop Artificial Intelligence (AI) recognition models for long-term crops and major crops, enabling rapid and precise crop monitoring and management.

Keywords: BDCN, agricultural parcel, GIS, ArcGIS add-in tool, agricultural management

INTRODUCTION

Taiwan's agricultural policies have historically relied on cadastral maps as the primary geographic reference. While cadastral maps serve multiple functions—such as land ownership management and urban-rural planning—they inadequately capture the diversity and dynamic changes in crop cultivation. To accurately and promptly obtain agricultural production information, policies are shifting toward parcel-based farmland surveys.

A parcel is defined as the smallest contiguous area visible in aerial imagery that cultivates a single crop. Compared to cadastral maps, parcels more accurately reflect actual farmland use and provide higher precision in agricultural statistics. Cadastral boundaries often diverge from real-world cultivation practices due to variations in crop growth cycles and farmers' adjustments to planting strategies based on market conditions. Consequently, crop area estimates derived from cadastral maps may deviate from actual cultivation areas. Agricultural parcel maps not only offer detailed farmland data but also integrate with modern technologies like remote sensing imagery and GIS tools to achieve faster and more accurate land-use information.

In 2021, the Department of Information Technology (DIT), Ministry of Agriculture (MOA), initiated the development of Taiwan's first nationwide agricultural parcel map. Using cadastral maps and national land use survey maps as the base, technicians overlaid aerial imagery and manually revised boundaries to align with crop cultivation patterns, resulting in approximately 2.7 million parcels (Agricultural Engineering Research Center, 2021). This process demanded substantial time, labor, and technical precision. To address annual changes in farmland characteristics, a deep learning-based edge detection model (the GEO-AI tool) was developed in 2022 as an ArcGIS Pro add-in tool. The GEO-AI tool identifies parcels requiring manual updates from the national dataset, allowing staff to verify and edit boundaries based on detection results (Interactive Digital Technologies Inc., 2023). This hybrid approach—combining automated detection with manual verification—improves efficiency (Lin & Zhang, 2021) while ensuring the accuracy of agricultural parcel maps, enabling timely reflection of actual land use.

The accuracy of the GEO-AI tool directly impacts the quality of each agricultural parcel map version. By collecting misjudged and overlooked cases during revisions and retraining the model with annotated training data, the tool's interpretive capabilities are continuously enhanced. This iterative process allows the GEO-AI tool to identify future changes in land use more precisely. In summary, the DIT of MOA aims not only to establish a national agricultural parcel map but also to create a sustainable system for ongoing maintenance and updates. Through the GEO-AI tool, systematic data collection, and model refinement, Taiwan's agricultural resources can be managed more effectively, laying a robust foundation for agricultural modernization and sustainable development.

DATA PROCESSING AND FUNCTION DEVELOPMENT

To develop the parcel map verification tool, we first obtained DMCIII imagery for model training from the Aerial Survey and Remote Sensing Branch, Forestry and Nature Conservation Agency, Ministry of Agriculture. The images were captured between December 6, 2022, and April 4, 2023, and cover 40 frames at a scale of 1:5,000 across six counties/cities: Kaohsiung City, Hsinchu County, Changhua County, Yunlin County, Pingtung County, and Hualien County. These frames contain 50,073 parcels in total.

For this model development, the DIT of MOA primarily employed the Bi-Directional Cascade Network (BDCN) method. BDCN is a deep learning-based edge detection method whose precision in identifying boundaries in imagery can be likened to the sharp vision of an eagle. Much as the human visual system discerns objects at varying distances and clarity levels, BDCN processes edges across multiple scales and resolutions simultaneously. Unlike traditional single-scale detection methods, BDCN works like viewing a scene through several pairs of glasses with different prescriptions, so that edges of different sizes are less likely to escape detection (He et al., 2019).

The BDCN architecture is built upon a VGG16-based Convolutional Neural Network (CNN), divided into hierarchical convolutional layers, also known as convolutional blocks. Each block extracts distinct features from the imagery, progressively deepening the network's understanding. Additionally, BDCN incorporates a Scale Enhancement Module (SEM), functioning as a "zoom lens" to adjust observation scales. Through the SEM, the model extracts features at varying resolutions, enabling precise boundary identification (Esri, n.d.).
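The multi-scale idea behind the SEM can be illustrated with a toy example. The sketch below is not BDCN itself: it applies one small edge kernel to a 1-D signal at several dilation rates and combines the branches by summation, which is assumed here as the fusion step. The function names, the kernel, and the dilation rates (1, 2, 4) are illustrative assumptions and do not come from the source.

```python
def dilated_conv1d(signal, kernel, dilation):
    """Same-size 1-D cross-correlation with a dilation rate.

    Indices that fall outside the signal are clamped to the nearest
    valid sample (edge replication), so borders produce no false edges.
    """
    half = len(kernel) // 2
    last = len(signal) - 1
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = min(max(i + (k - half) * dilation, 0), last)
            acc += weight * signal[j]
        out.append(acc)
    return out


def scale_enhancement(signal, kernel, dilations):
    """Toy multi-scale block: run parallel dilated branches and sum them."""
    branches = [dilated_conv1d(signal, kernel, d) for d in dilations]
    return [sum(values) for values in zip(*branches)]


# A step edge between index 7 and index 8 (hypothetical 1-D "image" row).
step = [0.0] * 8 + [1.0] * 8
edge_kernel = [-1.0, 0.0, 1.0]  # simple difference filter, an assumption

response = scale_enhancement(step, edge_kernel, dilations=(1, 2, 4))
print(response)
```

The summed response peaks at the two samples adjacent to the step and falls off with distance, because every dilation rate responds near the true edge while only the larger rates respond farther away.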

By integrating BDCN with ArcGIS and combining deep learning resources with a graphical interface, the technical barrier for edge detection is significantly lowered. This integration allows users to accurately identify land feature boundaries—such as agricultural parcels—while leveraging ArcGIS's spatial analysis capabilities. Through the graphical interface, users can intuitively perform parcel map verification, delineation, area measurement, and change analysis, thereby enhancing workflow efficiency and output quality. This technology proves particularly valuable for applications requiring precise land boundary extraction and detailed spatial analysis.

Data pre-processing

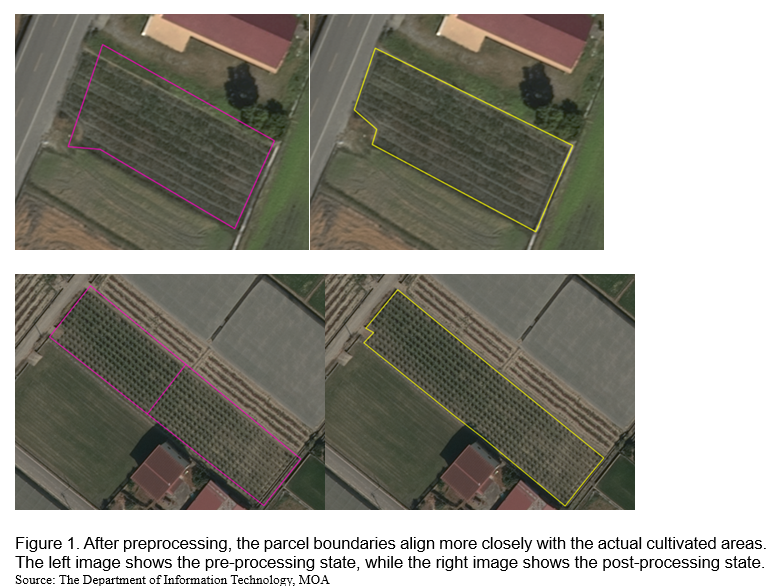

In the model training pipeline, the data preprocessing stage is critical. This phase focuses on enhancing features related to agricultural parcel boundaries in the imagery, such as field ridges, roads, and crop division lines. Clearer boundary features improve model recognition accuracy (Du et al., 2019). Specifically:

- Edge Visibility Assessment: The preprocessing first evaluates whether distinct edge lines (e.g., field ridges or crop boundaries) are present in the imagery. Clear edges facilitate accurate parcel boundary detection, while blurred edges may compromise verification results.

- Manual Revision: Discrepancies may exist between current parcel maps and the latest aerial imagery. Technical staff are required to conduct visual comparisons and perform manual adjustments to align parcel boundaries with updated image data (Interactive Digital Technologies Inc., 2023).

Parcels are stored as vector polygons in geographic files, and their boundaries can be represented as polylines. Two key reasons necessitate converting these polylines to rasterized parcel boundary images:

1. Scale Consistency: Polylines in ArcGIS Pro dynamically adjust with zoom levels, causing unstable edge detection. Raster images maintain fixed pixel sizes, ensuring detection consistency.

2. Tool Compatibility: ArcGIS Pro's Export Training Data for Deep Learning tool primarily accepts raster inputs (Esri, n.d.). Using the Buffer tool, parcel polylines are converted to rasterized boundary images (2-meter and 6-meter widths) to meet model requirements and minimize scaling-induced errors.

Comparative tests revealed that models trained on 2-meter buffered raster images extracted more complete parcel edge features than those using 6-meter buffers (Interactive Digital Technologies Inc., 2023). These meticulous preprocessing steps ensure data accuracy, establishing a high-quality training dataset that lays a robust foundation for subsequent model training.
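The effect of the buffer width on the rasterized label can be sketched without ArcGIS. The example below is a minimal pure-Python illustration, not the project's actual workflow: it marks every pixel whose center lies within half the buffer width of a boundary segment. It assumes that the stated 2-meter and 6-meter values are total band widths and that the pixel size is 1 meter; both are assumptions, as are all function names.

```python
import math


def point_segment_distance(px, py, ax, ay, bx, by):
    """Shortest distance from point P to segment AB (all units in meters)."""
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project P onto AB and clamp the projection to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def rasterize_boundary(segments, width_m, rows, cols, pixel_size_m):
    """Return a rows x cols grid with 1 where a pixel center is inside the buffer."""
    half_width = width_m / 2.0  # assumption: width_m is the full band width
    grid = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            cx = (c + 0.5) * pixel_size_m
            cy = (r + 0.5) * pixel_size_m
            if any(point_segment_distance(cx, cy, *seg) <= half_width
                   for seg in segments):
                grid[r][c] = 1
    return grid


# One vertical parcel boundary at x = 5 m crossing a 10 m x 10 m tile.
segments = [(5.0, 0.0, 5.0, 10.0)]
narrow = rasterize_boundary(segments, 2.0, 10, 10, 1.0)
wide = rasterize_boundary(segments, 6.0, 10, 10, 1.0)

narrow_count = sum(map(sum, narrow))
wide_count = sum(map(sum, wide))
print(narrow_count, wide_count)
```

In this tile the 2-meter band labels 20 pixels as boundary and the 6-meter band labels 60, which shows how the buffer width controls the thickness of the edge the model is trained to reproduce.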

Generating training datasets

After preparing the training data, the Export Training Data for Deep Learning tool in ArcGIS Pro was used to extract image chips from existing agricultural parcel maps and corresponding DMCIII imagery, creating the training dataset required for the deep learning model. A total of 169,236 datasets were generated in this case (Interactive Digital Technologies Inc., 2023).

To enable computers to recognize specific land features through feature learning, pre-labeled data must be prepared (e.g., vector data in GIS or classified raster imagery). The Export Training Data for Deep Learning tool divides these data into small segments called image chips, each containing only the target features for the model to learn—such as specific land types (e.g., farmland, solar panels, fishponds) or characteristics (e.g., boundaries, textures) (Esri, n.d.).

For example, to train a model to identify farmland:

- GIS vector data outlining farmland boundaries are prepared.

- The tool crops these boundaries from aerial imagery, producing image chips that each contain a labeled farmland segment with metadata.

- The model learns to recognize farmland features by analyzing these labeled chips.

In summary, deep learning requires vast amounts of image chips for training. The Export Training Data for Deep Learning tool efficiently generates labeled chips from existing GIS data and remote sensing imagery.
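The chipping step can be sketched as a sliding window over the image. The example below is a minimal illustration of the idea, not the Export Training Data for Deep Learning tool itself; the function names, the 256-pixel tile size, and the 128-pixel stride are assumptions chosen for illustration and are not reported in the source.

```python
def chip_windows(height, width, tile_size, stride):
    """List (top, left, bottom, right) pixel windows that fit inside the image."""
    windows = []
    for top in range(0, height - tile_size + 1, stride):
        for left in range(0, width - tile_size + 1, stride):
            windows.append((top, left, top + tile_size, left + tile_size))
    return windows


def contains_label(label_grid, window):
    """True if any labeled (non-zero) pixel falls inside the window."""
    top, left, bottom, right = window
    return any(label_grid[r][c] for r in range(top, bottom)
               for c in range(left, right))


# Hypothetical 1024 x 1024 image with a single labeled boundary pixel.
label_grid = [[0] * 1024 for _ in range(1024)]
label_grid[300][300] = 1

windows = chip_windows(1024, 1024, tile_size=256, stride=128)
labeled = [w for w in windows if contains_label(label_grid, w)]
print(len(windows), len(labeled))
```

A stride smaller than the tile size makes neighboring chips overlap, so the same labeled feature appears in several chips; here the single labeled pixel is covered by four of the 49 windows.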

Model training results

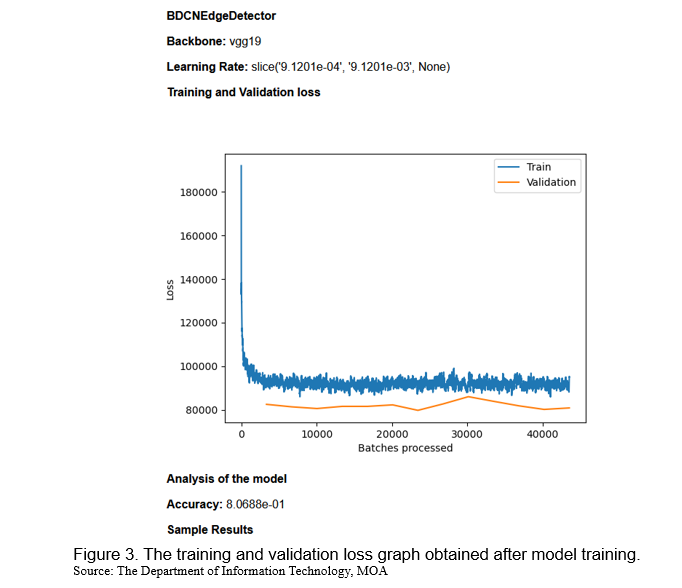

Using the prepared training dataset, model training commenced by configuring parameters in the Train Deep Learning Model tool. The training and validation loss curves indicate that the training loss consistently decreased with increasing batch iterations until stabilizing, showing model convergence. The close alignment between training and validation loss values suggests strong generalization capability (i.e., performance on unseen data).

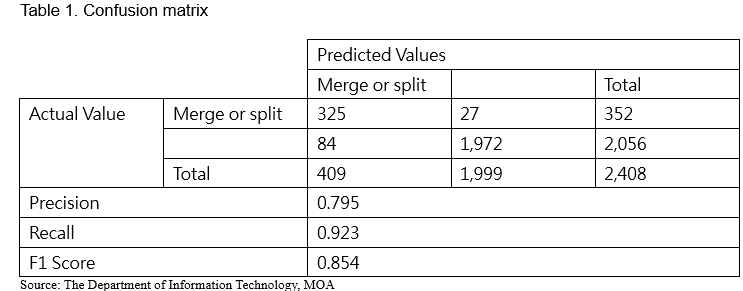

To assess the model's boundary detection performance, an independent test dataset, excluded from the training phase, was evaluated using precision, recall, and F1 score metrics. Analysis of the test frame 94182069_O230404a_19_hr4_moa (containing 2,408 parcels in Wandan Township, Pingtung County) revealed the following results after automated detection and manual validation: precision was 79.5%, recall was 92.3%, and the F1 score was 85.4%.
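As a consistency check, the F1 score is the harmonic mean of precision and recall, so the reported value can be reproduced from the other two. The short sketch below uses only the figures stated above; the function name is an illustrative choice.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


f1 = f1_score(0.795, 0.923)
print(f"F1 = {f1:.3f}")  # prints "F1 = 0.854"
```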

The confusion matrix revealed that approximately 13% of the 2,408 parcels required manual revision due to discrepancies between updated aerial imagery and existing agricultural parcel maps. Extrapolating this to Taiwan's 2.7 million parcels, an estimated 351,000 parcels would need manual verification. This model significantly reduces the manual workload, enabling rapid revision of urgent or mission-critical areas for immediate application in survey operations.
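The extrapolation is a simple proportion, sketched below with the figures from the text. It assumes that the roughly 13% revision rate observed in the single test frame holds nationwide, which is the same assumption the estimate itself makes; the variable names are illustrative.

```python
revision_rate = 0.13          # share of parcels flagged in the test frame
test_parcels = 2_408          # parcels in the Wandan Township test frame
national_parcels = 2_700_000  # parcels in the nationwide map

flagged_in_test = round(test_parcels * revision_rate)
flagged_nationwide = round(national_parcels * revision_rate)
unchanged_nationwide = national_parcels - flagged_nationwide

print(flagged_in_test, flagged_nationwide, unchanged_nationwide)
```

Under this assumption about 313 parcels in the test frame and about 351,000 parcels nationwide need manual verification, leaving roughly 2.35 million parcels that staff do not need to re-examine.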

Add-In tool development

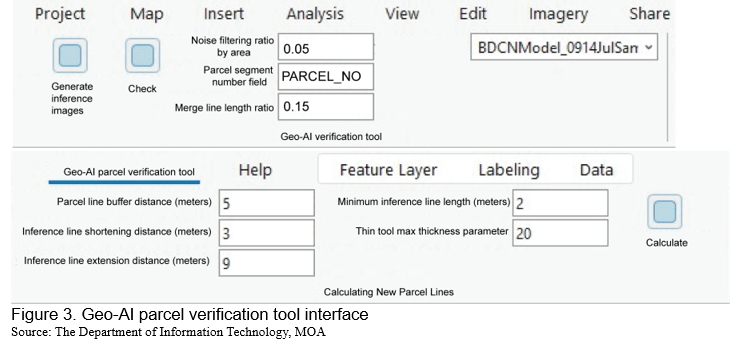

After model training, new imagery is typically interpreted using Geoprocessing tools such as "Detect Objects Using Deep Learning" or "Classify Objects Using Deep Learning" (Esri, n.d.). Afterwards, digital mapping personnel manually decide how to update the existing agricultural parcel map by comparing the interpretation results with the old map—determining whether to merge, split, or perform both operations on each parcel. This approach, however, often leads to low editing efficiency, because it requires extra time for personnel to evaluate the appropriate modification for every parcel.

To facilitate the use of the trained model, the research team developed an ArcGIS Pro Add-In tool. The concept behind this tool is to compare the model's interpretation result (provided as a raster image) with the existing agricultural parcel map (in polygon format). Prior to this comparison, the parcel map is segmented along its turning points into line features and buffered by 1 meter. Then, the "Zonal Statistics as Table" tool is employed to calculate the mode of pixel values along each line in the raster image (Esri, n.d.). In simple terms, this process assesses the degree of overlap between each line and the interpretation result: if an overlap is detected, the line is retained during editing; if no overlap exists, the line is marked for removal. The information indicating the need for merging, splitting, or both is then written directly into the attribute data of the parcels scheduled for revision.
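The per-line comparison can be sketched in a few lines. The example below is a simplified stand-in for the zonal statistics step, not the add-in's code: it takes the pixel values sampled inside each buffered line and keeps the line when the most frequent value is the edge class. The encoding (1 = detected edge, 0 = background), the tie-breaking rule, and all names are assumptions.

```python
from collections import Counter


def zonal_mode(pixel_values):
    """Most frequent pixel value in a zone; ties go to the smaller value (assumption)."""
    counts = Counter(pixel_values)
    highest = max(counts.values())
    return min(value for value, n in counts.items() if n == highest)


def classify_lines(zones, edge_value=1):
    """Map each line id to 'retain' or 'remove' based on its zonal mode."""
    return {
        line_id: "retain" if zonal_mode(values) == edge_value else "remove"
        for line_id, values in zones.items()
    }


# Hypothetical pixel samples inside the 1-meter buffer of two boundary lines.
zones = {
    "line_a": [1, 1, 1, 0, 1],  # mostly overlaps a detected edge
    "line_b": [0, 0, 1, 0, 0],  # mostly background
}

decisions = classify_lines(zones)
print(decisions)  # prints "{'line_a': 'retain', 'line_b': 'remove'}"
```

Using the mode rather than a single pixel makes the decision tolerant of small misalignments between the existing boundary and the detected edge.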

For example, once the model has interpreted the latest aerial imagery and the results are compared with the existing parcel map, three scenarios typically emerge. The first is a merge: under the previous planting, the land was divided into at least two crop zones, but in the new aerial imagery the area is planted with only one crop. The second scenario is a split: in the old parcel map, a single-crop planting produced a single parcel, but the new imagery reveals that at least two different crops are now being cultivated on the same land, necessitating a subdivision. The third scenario is a combination of split and merge, which is most often observed when adjacent parcels display the characteristics of both previous cases.
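The three scenarios can be expressed as a small decision rule. The sketch below assumes two hypothetical per-parcel flags that the source does not define: whether an existing boundary line of the parcel was marked for removal, and whether the model detected a new edge inside the parcel. The labels written to the attribute data are likewise illustrative.

```python
def revision_action(boundary_removed, interior_edge_detected):
    """Return the annotation for one parcel from two boolean flags (hypothetical)."""
    if boundary_removed and interior_edge_detected:
        return "split and merge"
    if boundary_removed:
        return "merge"   # an old dividing line no longer matches any detected edge
    if interior_edge_detected:
        return "split"   # a new edge appears inside a formerly single parcel
    return "no change"


# Hypothetical parcels: (boundary_removed, interior_edge_detected)
parcels = {
    "P001": (True, False),
    "P002": (False, True),
    "P003": (True, True),
    "P004": (False, False),
}

annotations = {pid: revision_action(*flags) for pid, flags in parcels.items()}
print(annotations)
```

Parcels annotated "no change" need no manual attention, which is how the tool narrows the editing workload to the flagged subset.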

While the model demonstrates strong capability in detecting parcel boundaries, factors such as lighting, angles, or atmospheric conditions in the latest aerial imagery may still cause misjudgments or omissions. When such cases are encountered during revisions, they can be flagged in the attribute data and provided to the model development team for further retraining to enhance accuracy.

CONCLUSION

This study successfully developed a GEO-AI tool for verifying agricultural parcel maps in Taiwan by integrating deep learning (BDCN) with the ArcGIS Pro platform. The tool identifies parcel boundaries in aerial imagery and pinpoints parcels requiring manual revision, substantially reducing labor demands and overall workload. Experimental validation on a test frame in Wandan Township, Pingtung County, achieved a precision of 0.795, a recall of 0.923, and an F1 score of 0.854. Applying this model to Taiwan's 2.7 million parcels is projected to reduce manual verification to approximately 351,000 parcels, significantly enhancing revision efficiency.

To further improve usability, the research team developed an ArcGIS Pro add-in tool that directly embeds model interpretation results into parcel attribute data and displays them as map labels. This enables editors to understand revision priorities intuitively, thereby boosting operational efficiency. Additionally, the tool allows misjudged or missed cases found during revisions to be fed back to the model development team, enabling retraining and refinement.

Overall, this study not only successfully developed an efficient agricultural parcel map verification tool but also established a maintainable and continuously updatable framework, providing effective management and application for Taiwan's agricultural base maps. The parcel map data are now integrated into the National GIS Cadastral Map Supply System, accessible to the Ministry of Agriculture, its affiliated units, and partner organizations. The DIT of MOA has further developed tools, including a Field Disaster Survey App and a Motorcycle Street-View Imaging System, to collect crop damage photos in the field. These photos are uploaded and processed by the backend system to extract GPS timestamps, coordinates, camera tilt and elevation angles, and then overlaid with corresponding parcels on a mapping platform, documenting the appearance of Taiwan's farmland across different growing seasons.

Future efforts will focus on labeling training samples from archived aerial and satellite imagery to develop Artificial Intelligence (AI) recognition models for long-term crops and major crops. Automated workflows for identification and model retraining will enable rapid and precise crop monitoring and management. These AI models will accurately identify crop growth status and area changes, generating parcel-level statistics to inform agricultural policy and improve farming strategies, ultimately enhancing productivity.

REFERENCES

Agricultural Engineering Research Center (2023). 2023 National Farmland Plot Map Update Project. Ministry of Agriculture.

Agricultural Engineering Research Center (2021). 2021 National Farmland Plot Map Integration and Revision Project. Council of Agriculture, Executive Yuan.

Du, Z., Yang, J., Ou, C., & Zhang, T. (2019). Smallholder Crop Area Mapped with a Semantic Segmentation Deep Learning Method. Remote Sensing, 11(7), 888.

Esri. (n.d.). Train Deep Learning Model (Image Analyst)—ArcGIS Pro Documentation. Retrieved from https://pro.arcgis.com/en/pro-app/latest/tool-reference/image-analyst/train-deep-learning-model.htm

Esri. (n.d.). Pixel Classification—ArcGIS Pro Documentation. Retrieved from https://pro.arcgis.com/en/pro-app/latest/tool-reference/image-analyst/pixel-classification.htm

Esri. (n.d.). Edge Detection with ArcGIS API for Python. Retrieved from https://developers.arcgis.com/python/sample-notebooks/edge-detection/

He, J., Zhang, S., Yang, M., Shan, Y., & Huang, T. (2019). Bi-Directional Cascade Network for Perceptual Edge Detection. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

Interactive Digital Technologies Inc. (2023). The Project of the Integrated Applications with Agricultural Geographic Information System (AGIS). Ministry of Agriculture.

Lin, L., & Zhang, C. (2021). Land Parcel Identification. In L. Di, & B. Üstündağ (eds.), Agro-geoinformatics (pp. 163-172). Springer, Cham.

Utilizing Artificial Intelligence (AI) Tools to Develop Agricultural Parcel Maps for Assisting Crop Surveys

ABSTRACT

This paper explains that Taiwan's agricultural management policies have long used cadastral maps as the foundational geographic data for farmland statistics. However, cadastral maps lack the flexibility to reflect changes in crop cultivation boundaries. The Department of Information Technology, Ministry of Agriculture of Taiwan, has developed agricultural parcel maps based on aerial imagery showing farmland cultivation patterns. By implementing a Bi-Directional Cascade Network (BDCN) edge detection model integrated into an ArcGIS Pro add-in tool, the system automates parcel boundary detection. This innovation resolves the historical challenges of high labor costs and time pressure when manually updating parcel boundaries using new aerial imagery. Experimental results from 2,408 parcels in Wandan Township, Pingtung County, demonstrate a 79.5% precision rate, 92.3% recall rate, and an F1 score of 85.4%. For nationwide updates across 2.7 million parcels, only 351,000 parcels (13% of the total) require manual verification. This study confirms that integrating geographic information systems (GIS) with deep learning enhances the timeliness of agricultural surveys, establishing a technical foundation for farmland management. Future work will employ deep learning to develop Artificial Intelligence (AI) recognition models for long-term crops and major crops, enabling rapid and precise crop monitoring and management.

Keywords: BDCN, agricultural parcel, GIS, ArcGIS add-in tool, agricultural management

INTRODUCTION

Taiwan's agricultural policies have historically relied on cadastral maps as the primary geographic reference. While cadastral maps serve multiple functions—such as land ownership management and urban-rural planning—they inadequately capture the diversity and dynamic changes in crop cultivation. To accurately and promptly obtain agricultural production information, policies are shifting toward parcel-based farmland surveys.

A parcel is defined as the smallest contiguous area visible in aerial imagery that cultivates a single crop. Compared to cadastral maps, parcels more accurately reflect actual farmland use and provide higher precision in agricultural statistics. Cadastral boundaries often diverge from real-world cultivation practices due to variations in crop growth cycles and farmers' adjustments to planting strategies based on market conditions. Consequently, crop area estimates derived from cadastral maps may deviate from actual cultivation areas. Agricultural parcel maps not only offer detailed farmland data but also integrate with modern technologies like remote sensing imagery and GIS tools to achieve faster and more accurate land-use information.

In 2021, the Department of Information Technology (DIT), Ministry of Agriculture (MOA), initiated the development of Taiwan's first nationwide agricultural parcel map. Using cadastral maps and national land use survey maps as the base, technicians overlaid aerial imagery and manually revised boundaries to align with crop cultivation patterns, resulting in approximately 2.7 million parcels (Agricultural Engineering Research Center, 2021). This process demanded substantial time, labor, and technical precision. To address annual changes in farmland characteristics, a deep learning-based edge detection model (the GEO-AI tool) was developed in 2022 as an ArcGIS Pro add-in tool. The GEO-AI tool identifies parcels requiring manual updates from the national dataset, allowing staff to verify and edit boundaries based on detection results (Interactive Digital Technologies Inc., 2023). This hybrid approach—combining automated detection with manual verification—improves efficiency (Lin & Zhang, 2021) while ensuring the accuracy of agricultural parcel maps, enabling timely reflection of actual land use.

The accuracy of the GEO-AI tool directly impacts the quality of each agricultural parcel map version. By collecting misjudged and overlooked cases during revisions and retraining the model with annotated training data, the tool's interpretive capabilities are continuously enhanced. This iterative process allows the GEO-AI tool to identify future changes in land use more precisely. In summary, the DIT of MOA aims not only to establish a national agricultural parcel map but also to create a sustainable system for ongoing maintenance and updates. Through the GEO-AI tool, systematic data collection, and model refinement, Taiwan's agricultural resources can be managed more effectively, laying a robust foundation for agricultural modernization and sustainable development.

DATA PROCESSING AND FUNCTION DEVELOPMENT

To develop the parcel map verification tool, before model training, we utilized DMCIII imagery provided by the Aerial Survey and Remote Sensing Branch, Forestry and Nature Conservation Agency, Ministry of Agriculture. The images were captured between December 6, 2022, and April 4, 2023, covering 40 frames at a scale of 1:5,000 across six counties/cities: Kaohsiung City, Hsinchu County, Changhua County, Yunlin County, Pingtung County, and Hualien County. The total number of parcels included was 50,073.

For this model development, the DIT of MOA primarily employed the Bi-Directional Cascade Network (BDCN) method. BDCN is a deep learning-based edge detection technology that mimics the sharp vision of an eagle, precisely identifying boundaries in imagery. Inspired by the human visual system—which effortlessly discerns objects at varying distances and clarity levels—BDCN simultaneously processes edges across multiple scales and resolutions. Unlike traditional single-scale detection methods, BDCN acts like wearing multiple pairs of glasses with different prescriptions, ensuring no edge escapes detection (He et al., 2019).

The BDCN architecture is built upon a VGG16-based Convolutional Neural Network (CNN), divided into hierarchical convolutional layers, also known as convolutional blocks. Each block extracts distinct features from the imagery, progressively deepening the network's understanding. Additionally, BDCN incorporates a Scale Enhancement Module (SEM), functioning as a "zoom lens" to adjust observation scales. Through the SEM, the model extracts features at varying resolutions, enabling precise boundary identification (Esri, n.d.).

By integrating BDCN with ArcGIS and combining deep learning resources with a graphical interface, the technical barrier for edge detection is significantly lowered. This integration allows users to accurately identify land feature boundaries—such as agricultural parcels—while leveraging ArcGIS's spatial analysis capabilities. Through the graphical interface, users can intuitively perform parcel map verification, delineation, area measurement, and change analysis, thereby enhancing workflow efficiency and output quality. This technology proves particularly valuable for applications requiring precise land boundary extraction and detailed spatial analysis.

Data pre-processing

In the model training pipeline, the data preprocessing stage is critical. This phase focuses on enhancing features related to agricultural parcel boundaries in the imagery, such as field ridges, roads, and crop division lines. Clearer boundary features improve model recognition accuracy (Du et al., 2019). Specifically:

Parcels are stored as vector polygons in geographic files. However, two key reasons necessitate converting polylines to rasterized parcel boundary images:

1. Scale Consistency: Polylines in ArcGIS Pro dynamically adjust with zoom levels, causing unstable edge detection. Raster images maintain fixed pixel sizes, ensuring detection consistency.

2. Tool Compatibility: ArcGIS Pro's Export Training Data for Deep Learning tool primarily accepts raster inputs (Esri, n.d.). Using the Buffer tool, parcel polylines are converted to rasterized boundary images (2-meter and 6-meter widths) to meet model requirements and minimize scaling-induced errors.

Comparative tests revealed that models trained on 2-meter buffered raster images extracted more complete parcel edge features than those using 6-meter buffers (Interactive Digital Technologies Inc., 2023). These meticulous preprocessing steps ensure data accuracy, establishing a high-quality training dataset that lays a robust foundation for subsequent model training.

Generating training datasets

After preparing the training data, the Export Training Data for Deep Learning tool in ArcGIS Pro was used to extract image chips from existing agricultural parcel maps and corresponding DMCIII imagery, creating the training dataset required for the deep learning model. A total of 169,236 datasets were generated in this case (Interactive Digital Technologies Inc., 2023).

To enable computers to recognize specific land features through feature learning, pre-labeled data must be prepared (e.g., vector data in GIS or classified raster imagery). The Export Training Data for Deep Learning tool divides these data into small segments called image chips, each containing only the target features for the model to learn—such as specific land types (e.g., farmland, solar panels, fishponds) or characteristics (e.g., boundaries, textures) (Esri, n.d.).

For example, to train a model to identify farmland:

In summary, deep learning requires vast amounts of image chips for training. The Export Training Data for Deep Learning tool efficiently generates labeled chips from existing GIS data and remote sensing imagery.

Model training results

Using the prepared training dataset, model training commenced by configuring parameters in the Train Deep Learning Model tool. The training and validation loss curves indicate that the training loss consistently decreased with increasing batch iterations until stabilizing, showing model convergence. The close alignment between training and validation loss values suggests strong generalization capability (i.e., performance on unseen data).

To assess the model’s boundary detection performance, an independent test dataset—excluded from the training phase—was evaluated using precision, recall, and F1 score metrics. Analysis of the test frame 94182069_O230404a_19_hr4_moa (containing 2,408 parcels in Wandan Township, Pingtung County) revealed the following results after automated detection and manual validation: precision was 79.5%, recall was 92.3%, and F1 Score was 85.4%.

The confusion matrix revealed that approximately 13% of the 2,408 parcels required manual revision due to discrepancies between updated aerial imagery and existing agricultural parcel maps. Extrapolating this to Taiwan's 2.7 million parcels, an estimated 351,000 parcels would need manual verification. This model significantly reduces the manual workload, enabling rapid revision of urgent or mission-critical areas for immediate application in survey operations.

Add-In tool development

After model training, new imagery is typically interpreted using Geoprocessing tools such as "Detect Objects Using Deep Learning" or "Classify Objects Using Deep Learning" (Esri, n.d.). Afterwards, digital mapping personnel manually decide how to update the existing agricultural parcel map by comparing the interpretation results with the old map—determining whether to merge, split, or perform both operations on each parcel. This approach, however, often leads to low editing efficiency, because it requires extra time for personnel to evaluate the appropriate modification for every parcel.

To facilitate the use of the trained model, the research team developed an ArcGIS Pro Add-In tool. The concept behind this tool is to compare the model’s interpretation result (provided as a raster image) with the existing agricultural parcel map (in polygon format). Prior to this comparison, the parcel map is segmented along its turning points into line features and buffered by 1 meter. Then, the "Zonal Statistics as Table" tool is employed to calculate the mode of pixel values along each line in the raster image (Esri, n.d.). In simple terms, this process assesses the degree of overlap between each line and the interpretation result; if an overlap is detected, that line is retained during editing, whereas if no overlap exists, the line is deemed to be removed. The information indicating the need for merging, splitting, or both is then directly annotated and written into the attribute data of the parcels scheduled for revision.

For example, once the model has interpreted the latest aerial imagery and the results are compared with the existing parcel map, three scenarios typically emerge. The first is a merge: this indicates that in the previous crop planting, the land was divided into at least two crop zones, but in the new aerial imagery, the area is planted with only one crop. The second scenario is a split: in the old parcel map, a single-crop planting led to a single parcel, but the new imagery reveals that at least two different crops are now being cultivated on the same land, necessitating a subdivision. The third scenario is a combination of split and merge, which is most observed when adjacent parcels display the characteristics of both previous cases.

While the model demonstrates strong capability in detecting parcel boundaries, factors such as lighting, angles, or atmospheric conditions in the latest aerial imagery may still cause misjudgments or omissions. When such cases are encountered during revisions, they can be flagged in the attribute data and provided to the model development team for further retraining to enhance accuracy.

CONCLUSION

This study successfully developed a Geo-AI tool for verifying agricultural parcel maps in Taiwan by integrating deep learning (BDCN) with the ArcGIS Pro platform. The tool effectively identifies parcel boundaries in aerial imagery and pinpoints parcels requiring manual revision, drastically reducing labor demands and overall workload. Experimental validation in a test frame in Wandan Township, Pingtung County, achieved a precision of 0.795, a recall of 0.923, and an F1 score of 0.854. Applying this model to Taiwan's 2.7 million parcels is projected to reduce manual verification to approximately 351,000 parcels, significantly enhancing revision efficiency.
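As a quick consistency check on the figures reported above, the F1 score can be recomputed from precision and recall using the standard harmonic-mean definition, and the share of parcels needing manual verification follows from the two parcel counts. The variable names are chosen here for illustration.

```python
precision, recall = 0.795, 0.923

# F1 is the harmonic mean of precision and recall.
f1 = 2 * precision * recall / (precision + recall)

# Share of the nationwide parcels that still need manual verification.
manual_share = 351_000 / 2_700_000

print(f"F1 = {f1:.3f}, manual share = {manual_share:.0%}")
```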

To further improve usability, the research team developed an ArcGIS Pro add-in tool that embeds the model's interpretation results directly into the parcel attribute data and displays them as map labels. This lets editors see revision priorities at a glance, thereby boosting operational efficiency. The tool also allows cases that were misjudged or missed during revision to be reported back to the model development team for retraining and refinement.

Overall, this study not only developed an efficient agricultural parcel map verification tool but also established a maintainable and continuously updatable framework for managing and applying Taiwan's agricultural base maps. The parcel map data are now integrated into the National GIS Cadastral Map Supply System, accessible to the Ministry of Agriculture, its affiliated units, and partner organizations. The DIT of MOA has further developed tools, including a Field Disaster Survey App and a Motorcycle Street-View Imaging System, to collect crop damage photos in the field. These photos are uploaded and processed by the backend system to extract GPS timestamps, coordinates, and camera tilt and elevation angles, and are then overlaid with the corresponding parcels on a mapping platform, documenting the appearance of Taiwan's farmland across different growing seasons.

Future efforts will focus on labeling training samples from archived aerial and satellite imagery to develop Artificial Intelligence (AI) recognition models for long-term crops and major crops. Automated workflows for identification and model retraining will enable rapid and precise crop monitoring and management. These AI models will accurately identify crop growth status and area changes, generating parcel-level statistics to inform agricultural policy and improve farming strategies, ultimately enhancing productivity.

REFERENCES

Agricultural Engineering Research Center (2023). 2023 National Farmland Plot Map Update Project. Ministry of Agriculture.

Agricultural Engineering Research Center (2021). 2021 National Farmland Plot Map Integration and Revision Project. Council of Agriculture, Executive Yuan.

Du, Z., Yang, J., Ou, C., & Zhang, T. (2019). Smallholder Crop Area Mapped with a Semantic Segmentation Deep Learning Method. Remote Sensing, 11(7), 888.

Esri. (n.d.). Train Deep Learning Model (Image Analyst)—ArcGIS Pro Documentation. Retrieved from https://pro.arcgis.com/en/pro-app/latest/tool-reference/image-analyst/train-deep-learning-model.htm

Esri. (n.d.). Pixel Classification—ArcGIS Pro Documentation. Retrieved from https://pro.arcgis.com/en/pro-app/latest/tool-reference/image-analyst/pixel-classification.htm

Esri. (n.d.). Edge Detection with ArcGIS API for Python. Retrieved from https://developers.arcgis.com/python/sample-notebooks/edge-detection/

He, J., Zhang, S., Yang, M., Shan, Y., & Huang, T. (2019). Bi-Directional Cascade Network for Perceptual Edge Detection. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

Interactive Digital Technologies Inc. (2023). The Project of the Integrated Applications with Agricultural Geographic Information System (AGIS). Ministry of Agriculture.

Lin, L., & Zhang, C. (2021). Land Parcel Identification. In L. Di, & B. Üstündağ (eds.), Agro-geoinformatics (pp. 163-172). Springer, Cham.